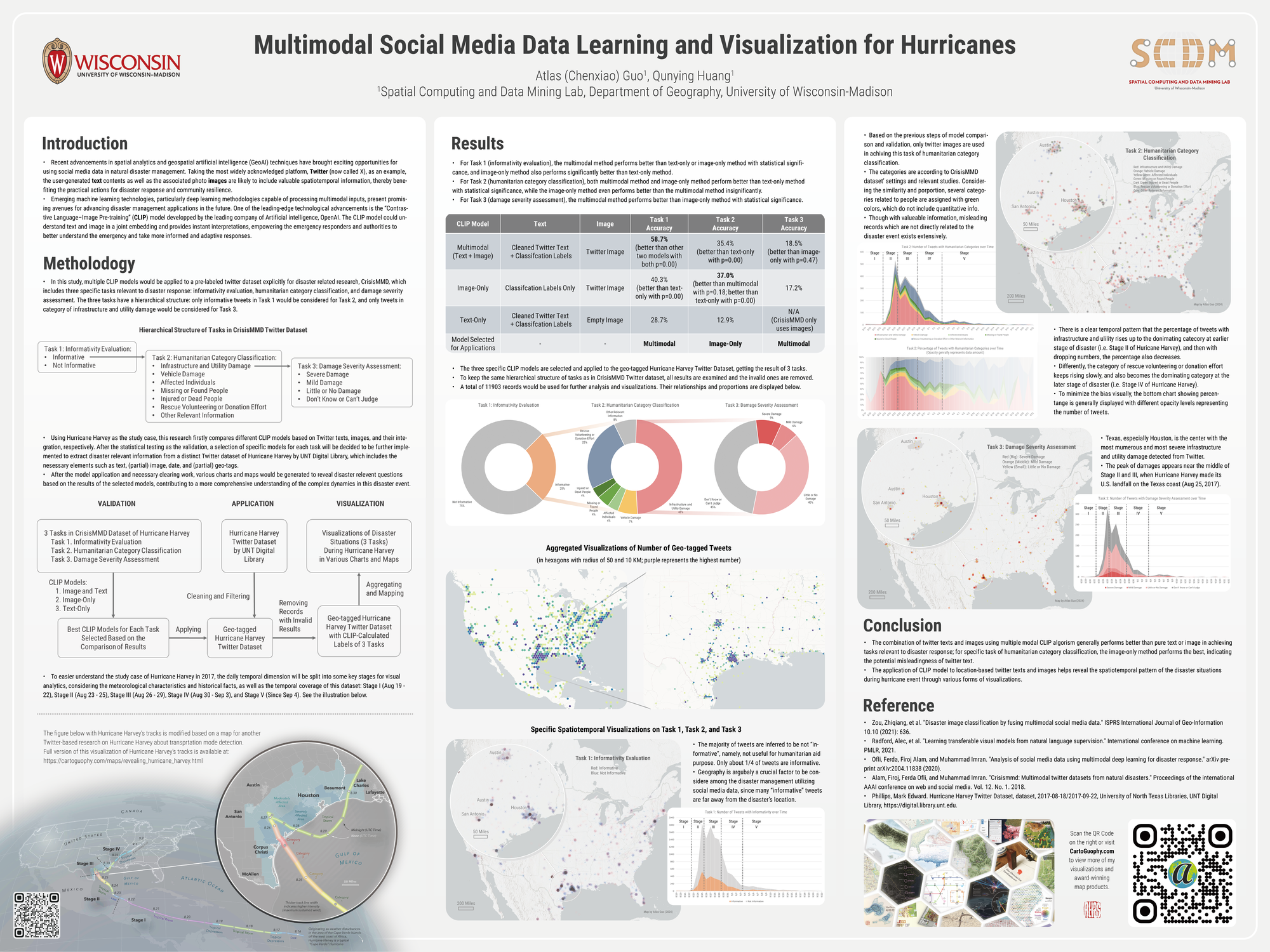

Multimodal Social Media Data Learning and Visualization for Hurricanes

Research poster presented at the CaGIS + UCGIS Symposium 2024

This is the poster presentation for the CaGIS + UCGIS Symposium 2024.

Abstract:

Recent advances in spatial analytics and geospatial artificial intelligence (GeoAI) have opened new possibilities for leveraging social media data in natural disaster management. Focusing on Twitter (now X), a widely used platform, this research shows how user-generated text and associated images can offer valuable spatiotemporal insights that support disaster response efforts and strengthen community resilience.

Despite the recognized potential of social media data in emergency management, there remains a clear gap in comprehensive studies that integrate GeoAI and cartographic visualization to rapidly mine these data and use them to enhance situational awareness.

The advent of cutting-edge machine learning technologies, especially those that can process multimodal data, suggests significant prospects for enriching disaster management applications. A prime example of such innovation is the Contrastive Language–Image Pre-training (CLIP) model developed by OpenAI, which is trained to map images and text into a shared embedding space so that the two modalities can be analyzed within a unified framework. This capability can help emergency responders and decision makers gain a deeper, more nuanced understanding of a crisis and intervene in a timely, adaptive manner.
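
As a rough illustration of how CLIP lets text and images be compared directly, the sketch below shows zero-shot label scoring on precomputed embeddings. It is not the authors' implementation. It assumes the image and text encoders have already run; the 4-dimensional vectors, the damage-severity prompts, the averaging step in `fuse`, and the scale of 100 are hypothetical placeholders (real CLIP embeddings have hundreds of dimensions, and CLIP learns its own logit scale during training).

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def normalize(v: Vector) -> List[float]:
    """Scale a vector to unit L2 length, as CLIP does before comparing embeddings."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity: the dot product of the two unit-normalized vectors."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def fuse(image_emb: Vector, text_emb: Vector) -> List[float]:
    """Hypothetical fusion: average the unit-normalized image and tweet-text embeddings.

    The result is re-normalized inside cosine(), so its length does not matter.
    """
    img, txt = normalize(image_emb), normalize(text_emb)
    return [(i + t) / 2 for i, t in zip(img, txt)]


def zero_shot(query: Vector, prompts: Dict[str, Vector], scale: float = 100.0) -> Dict[str, float]:
    """Softmax over scaled cosine similarities between a query and each label prompt."""
    logits = {label: scale * cosine(query, emb) for label, emb in prompts.items()}
    peak = max(logits.values())  # subtract the max logit for numerical stability
    exps = {label: math.exp(z - peak) for label, z in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


# Hypothetical embeddings standing in for CLIP text-encoder outputs of label prompts
prompts = {
    "severe damage": [1.0, 0.0, 0.0, 0.2],
    "mild damage": [0.0, 1.0, 0.0, 0.2],
    "no damage": [0.0, 0.0, 1.0, 0.2],
}
# Hypothetical image and tweet-text embeddings for a single tweet
image_emb = [0.9, 0.3, 0.0, 0.1]
text_emb = [0.8, 0.1, 0.1, 0.3]

probs = zero_shot(fuse(image_emb, text_emb), prompts)
print(max(probs, key=probs.get))  # severe damage
```

Averaging is only one possible fusion choice; concatenating the two embeddings and training a small classifier on labeled tweets is a common alternative when annotated data are available.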

This study uses multiple hurricane events to evaluate and compare the effectiveness of various machine learning models in processing and integrating Twitter's text and image data. The findings confirm that the multimodal approach, particularly when implemented with the CLIP model, excels at tasks crucial to disaster management, such as informativeness evaluation, humanitarian category classification, and damage severity assessment. After validation, the proposed multimodal method is applied to extract disaster-relevant information from a separate Twitter dataset collected during Hurricane Harvey in 2017. The classification results for these tweets, combined with their spatiotemporal information, are used to generate a suite of static and dynamic cartographic visualizations that offer a more comprehensive understanding of the complex dynamics of this disaster.
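
Combining each classified tweet with its time and location amounts to a grouping step before mapping. The following is a minimal sketch under stated assumptions, not the study's workflow: the record layout, the 0.1-degree grid, the label names, and the sample points near Houston are all hypothetical, and timestamps are treated as naive local times (real tweet timestamps are in UTC and would normally be converted first).

```python
import math
from collections import Counter
from datetime import datetime
from typing import Iterable, Tuple

# Hypothetical record layout: (ISO 8601 timestamp, latitude, longitude, predicted label)
Record = Tuple[str, float, float, str]


def grid_cell(lat: float, lon: float, size_deg: float = 0.1) -> Tuple[int, int]:
    """Snap a coordinate to the integer (row, column) index of a square lat/lon grid."""
    return (math.floor(lat / size_deg), math.floor(lon / size_deg))


def aggregate(records: Iterable[Record], size_deg: float = 0.1) -> Counter:
    """Count classified tweets per (day, grid cell, label)."""
    counts: Counter = Counter()
    for ts, lat, lon, label in records:
        # Timestamps are treated as naive local times (an assumption of this sketch)
        day = datetime.fromisoformat(ts).date().isoformat()
        counts[(day, grid_cell(lat, lon, size_deg), label)] += 1
    return counts


# Made-up sample records near Houston, for illustration only
sample = [
    ("2017-08-27T10:15:00", 29.76, -95.37, "infrastructure damage"),
    ("2017-08-27T18:40:00", 29.78, -95.36, "infrastructure damage"),
    ("2017-08-28T09:05:00", 29.76, -95.37, "rescue effort"),
]

# The two 2017-08-27 tweets fall in the same grid cell, so that key has a count of 2
for key, n in sorted(aggregate(sample).items()):
    print(key, n)
```

Each day's counts could drive one frame of an animated map, while summing over days would give the counts for a static map.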

In conclusion, this research demonstrates that CLIP-based multimodal data mining stands out as a powerful tool for integrating user-generated text and images from social media. By extracting crucial information and supporting cartographic visualization, it enhances disaster management capabilities and paves the way for future advances in natural disaster management.